Mobile Vision, Part 2: Augmented Reality

Augmented Reality (AR) is certainly one of the hottest topics in mobile vision. It’s a buzzword for games and apps as much as it is for the two big mobile platforms. Most people know it if they see it, but let’s first talk about what AR is.

It’s important to keep in mind that AR is an umbrella term that includes many different companies and technologies. The AR solutions on the market vary to some degree in capabilities, device requirements, and performance.

What is AR?

So what defines AR? We know that AR apps display the camera feed and composite (aka augment) images on top. What separates AR from stickers or other badging is that the composition is relative to the 3D space of your physical environment. In other words, as you move your phone, the composition of the imagery changes to mimic what the element would look like in the real world. For this to happen, AR software analyzes your camera feed and combines it with motion data from the device to build a virtual 3D representation of your environment. Virtual content placed in that representation is then composited on top of the camera feed.

How Does That Even Work?

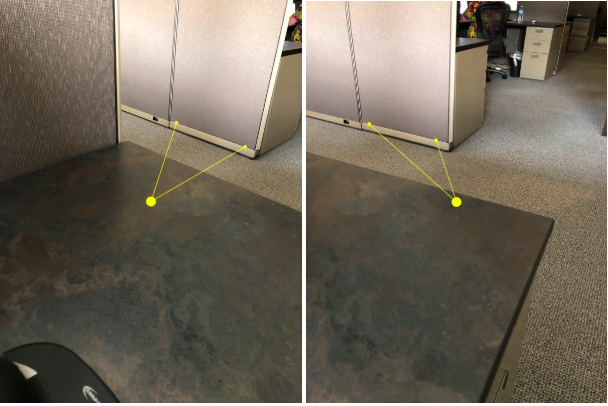

There are two essential aspects to how AR works based on the camera feed. The first part was touched on in the first article of this series: image analysis code is run to match visual elements in each frame of the camera feed. In this case, instead of faces, AR image analysis will look for features that can help in building the virtual 3D world. These features can be things like carpet patterns and lines on the edges of furniture.

Once the AR software identifies the features and can track them frame to frame, the second part of the process takes place. Remember all those games you played where you used your phone to steer a race car? Those very same sensors in your phone give the AR software precise measurements of how the device moves. As you move and tilt your device, the change in position and orientation, combined with how the tracked features shift in the image, helps compute how far from the camera those feature points sit.

Let’s look at two example images to better illustrate. In the first image, our AR system has found two spots along the base of a partition. The large center circle is the camera or device center and the lines illustrate the distance in 3D space between the center and the points. In the second image, the device has rotated to the right, yet the AR system can still track those same points and can determine their position based on how far the device actually moved.
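The geometry behind that idea can be sketched in a few lines. This is a simplified top-down (2D) illustration, not how any particular AR engine is implemented: it assumes we already know the two camera positions and the direction to the feature from each one, and it intersects the two sight lines. The function name `triangulate_2d` and all the numbers are made up for the example.

```python
import math


def triangulate_2d(cam_a, bearing_a, cam_b, bearing_b):
    """Locate a feature point by intersecting two sight lines (top-down view).

    cam_a, cam_b: (x, z) camera positions in metres.
    bearing_a, bearing_b: direction from each position to the feature,
    in radians, measured from the +x axis.
    """
    ax, az = cam_a
    bx, bz = cam_b
    dax, daz = math.cos(bearing_a), math.sin(bearing_a)
    dbx, dbz = math.cos(bearing_b), math.sin(bearing_b)

    # Solve cam_a + t * dir_a == cam_b + s * dir_b for t (2D cross products).
    denom = dax * dbz - daz * dbx
    if abs(denom) < 1e-9:
        # Parallel sight lines: the device has not moved enough to tell depth.
        raise ValueError("sight lines are parallel")
    t = ((bx - ax) * dbz - (bz - az) * dbx) / denom
    return (ax + t * dax, az + t * daz)


# The device starts at the origin, then moves 2 m to the right.
point = triangulate_2d((0.0, 0.0), math.radians(90),
                       (2.0, 0.0), math.radians(135))
print(f"{math.dist((0.0, 0.0), point):.2f} m from the first position")  # 2.00 m
```

Note that the parallel case is the interesting failure: if the device only turns in place, both sight lines start from the same spot and there is nothing to intersect, which is why movement matters.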

See It In Action

Now that we have a good understanding of how an AR engine should act, let’s start with a simple demo to see what it can do. When starting an AR session, an initial understanding of the environment needs to take place. To guide the user along this process, we’ve provided some basic instructions.

In this demo, we show yellow dots where the AR engine has discovered a feature point. A feature point is something in the visual environment that is being tracked and has a translation to the virtual 3D environment. When the AR session is done initializing, we’ll see many feature points appear. As you move your camera around, more and more feature points will be added. This allows your physical environment to continually feed the 3D space in the AR engine.

Now that we have a variety of feature points, go ahead and tap near one. We’ll place a marker cube at this spot to show you where in the 3D space you tapped. We’ve also added some text to the cube that shows its distance from the camera. Notice that if you simply rotate the camera around the marker, the distance doesn’t change, but if you get up and move around, it does.

An important part of making AR engaging is that you (or more precisely, your camera) live in the 3D environment. When the AR session was initialized, your camera was the origin of the world, but you can move from that point without disrupting the world. Because of this, our marker cube stays in the same spot while we move, which lets us view it from all sides.
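The distance label on the marker cube comes down to simple geometry once both the camera and the marker have positions in the same 3D world. Here is a minimal sketch of that calculation; the function names and coordinates are illustrative assumptions, not taken from the demo app.

```python
import math


def distance_to_marker(camera_pos, marker_pos):
    """Straight-line distance in metres between two (x, y, z) world positions."""
    return math.dist(camera_pos, marker_pos)


def distance_label(camera_pos, marker_pos):
    """Text shown on the marker cube, e.g. '1.50 m'."""
    return f"{distance_to_marker(camera_pos, marker_pos):.2f} m"


marker = (0.0, 0.0, -1.5)           # placed 1.5 m in front of the start point

# At session start the camera sits at the world origin.
print(distance_label((0.0, 0.0, 0.0), marker))   # 1.50 m

# Turning the device changes its orientation, not its position,
# so the distance stays the same. Walking 1 m sideways changes it.
print(distance_label((1.0, 0.0, 0.0), marker))   # 1.80 m
```

The marker's coordinates never change in this sketch. Only the camera position does, which mirrors how the cube stays put while you walk around it.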

A Useful Demo

Let’s move on to something a little more practical. Imagine you’re a manufacturer of furniture. You know that if customers see your furniture in a store, they’ll fall in love. However, online sales are lacking for the same reason: seeing is believing. Let’s see what AR can do to solve this problem.

The key to custom AR applications is 3D content. In our imaginary furniture scenario, this is made possible by our imaginary furniture designer who works in a CAD system designing all aspects of the products. With content ready, we can turn to the flow of the AR app.

While our first demo relied on feature points, we need to leverage another part of AR systems for our furniture to work: plane detection. When an AR system starts to notice many feature points along the same plane, it can alert the app that a flat surface, like a floor, exists. We will rely on this to prompt the user to place our virtual furniture in their AR environment on a level surface.
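To make the idea concrete, here is a deliberately naive sketch of horizontal plane detection: group feature points by height and report a plane once enough of them share roughly the same height. Real AR engines use more robust fitting and handle tilted and vertical surfaces too, so treat this only as an illustration. The function name, the thresholds, and the sample points are all assumptions.

```python
from collections import defaultdict


def detect_horizontal_plane(points, tolerance=0.02, min_points=10):
    """Return the height (y, in metres) of the best-supported horizontal
    plane among (x, y, z) feature points, or None if no plane has enough
    support yet."""
    bins = defaultdict(list)
    for _x, y, _z in points:
        # Points whose heights fall in the same `tolerance`-sized slot
        # are treated as lying on the same surface.
        bins[round(y / tolerance)].append(y)
    if not bins:
        return None
    best = max(bins.values(), key=len)
    if len(best) < min_points:
        return None
    return sum(best) / len(best)


# Twelve points on a floor about 1.2 m below the camera, with slight noise,
# plus a few unrelated points on furniture and walls.
floor = [(i * 0.1, -1.2 + (i % 3 - 1) * 0.003, -i * 0.05) for i in range(12)]
clutter = [(0.3, -0.5, -1.0), (0.4, 0.1, -1.2), (0.2, -0.8, -0.9)]

print(detect_horizontal_plane(clutter))          # None: not enough support
print(detect_horizontal_plane(floor + clutter))  # close to -1.2
```

The `min_points` threshold is what produces the "keep moving your phone" phase in an app: until enough points agree, there is no surface to report.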

Stepping through the app, we start by asking the user to move the phone to begin the AR scanning. After the AR engine tells us that it found a plane, we can prompt the user to place the chair. Our chair is now in the AR environment to be viewed from multiple angles. As an added bonus, the user can tap the chair and see a list of available color options for the chair.
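Placing the chair where the user taps can be thought of as casting a ray from the camera through the tapped pixel and finding where it meets the detected plane. The sketch below assumes a horizontal plane at a known height and a ray that has already been computed from the tap. Both of those would normally come from the AR engine, and the names here are hypothetical.

```python
def place_on_plane(ray_origin, ray_dir, plane_y):
    """Return the (x, y, z) point where a ray hits the horizontal plane
    y == plane_y, or None if the ray never reaches it."""
    ox, oy, oz = ray_origin
    dx, dy, dz = ray_dir
    if abs(dy) < 1e-9:
        return None          # ray runs parallel to the floor
    t = (plane_y - oy) / dy
    if t <= 0:
        return None          # plane is behind the camera along this ray
    return (ox + t * dx, plane_y, oz + t * dz)


camera = (0.0, 0.0, 0.0)
floor_height = -1.2

# Tapping toward the floor (ray pointing down and forward) gives a spot.
print(place_on_plane(camera, (0.0, -1.0, -1.0), floor_height))

# Tapping toward the ceiling never reaches the floor.
print(place_on_plane(camera, (0.0, 1.0, -1.0), floor_height))   # None
```

Returning `None` for a miss gives the app a natural hook for feedback, such as ignoring the tap or nudging the user to aim at the floor.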

Obviously, this is just a demo, and a real application would include options like chair rotation and movement, multiple chair placement, and taking a picture to show others what the chair will look like in your room.

One More

The previous demo helped potential customers decide on the purchase of our chair. However, in this world of flat-pack shipping, customers who purchase our chair will still have to wrestle with assembly. While black and white, multilingual (or non-lingual) instructions have guided furniture assemblers for years, maybe AR could help us again.

Just as our chair is an assembly of manufactured parts, our 3D model is a collection of smaller 3D objects. These objects can be manipulated in the AR environment to walk users through assembly steps. Let’s see how that would work.

We begin again by asking our user to scan the area for the AR session to start. Once a flat surface is available, we ask the user to select the location to place the chair. With a chair in the user’s AR world, we can guide them through the assembly steps, starting with what the finished product looks like. Each step animates the parts of the 3D model into place, and the circle arrow lets the user repeat a step if they need to review it again. The end result is the assembled chair.
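The step-by-step flow can be modeled as a small piece of state that tracks which step is showing. This is a sketch under assumptions: the class name, method names, and step descriptions are placeholders, and the real demo would trigger a 3D animation where this code just returns text.

```python
from dataclasses import dataclass


@dataclass
class AssemblyGuide:
    """Tracks which assembly step the user is currently viewing."""
    steps: list
    index: int = 0

    def current(self):
        return self.steps[self.index]

    def advance(self):
        """Move to the next step, stopping at the last one."""
        if self.index < len(self.steps) - 1:
            self.index += 1
        return self.current()

    def repeat(self):
        """Replay the current step without advancing (the circle arrow)."""
        return self.current()

    def finished(self):
        return self.index == len(self.steps) - 1


# Placeholder step names, for illustration only.
guide = AssemblyGuide([
    "Preview the finished chair",
    "Attach the legs to the seat",
    "Attach the backrest",
    "Assembled chair",
])
print(guide.current())   # Preview the finished chair
print(guide.advance())   # Attach the legs to the seat
print(guide.repeat())    # Attach the legs to the seat
```

Keeping the step state separate from the 3D scene means the same guide could drive a different model, or a plain on-screen checklist, without changes.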

While we took some assembly shortcuts for this demo, it’s easy to see that a 3D assembly tutorial made possible by AR is a serious upgrade over black and white instructions on paper.

But What About…

As mentioned earlier, there isn’t an industry standard to define AR. As technology vendors look to expand their offering, they will be adding features that go beyond what we described above.

In our previous article, we mentioned how facial detection (looking for anything that looks like a face) was different from facial recognition (looking for a specific face). That nomenclature helps to define these other technologies, although you may see vendors labeling their specific offerings differently:

- Image Recognition: The ability to look for a specific 2D image in your environment. The input for the AR engine would be a single image. For example, input a picture of a chess board to the AR engine and receive a recognition of it appearing in 3D space so you can populate it with chess pieces.

- Image Detection or Object Detection: Looking for objects (not flat images) in the camera feed. Involves a Machine Learning (ML) system that learns what an object could look like when viewed from various angles. As an example, if you wanted an app involving recognition of coffee mugs, your training data would be dozens or hundreds of images of coffee mugs, used to build a data model that image analysis could match against any coffee mug a user may point their camera towards.

- 3D Object Detection: Like the above detection of objects but using the 3D data instead of 2D camera footage. Provide a dataset of what an object looks like in 3D and get notified when the AR engine recognizes that object in its analysis of your environment.

We’ll be exploring many of these in future articles, so stay tuned!

Download and Try

The demos shown here are published in the iOS App Store for you to play with. As we continue this series looking at other technologies under the computer vision umbrella, we’ll update the app so you can check out additional demos.

Thank you for joining us on another stop on the journey through mobile computer vision. Reach out to Hanson on our social platforms with questions or contact us to see how AR can help your business.