Mobile Vision, Part 1: Face Detection

Computer vision is the field of study concerned with a computer’s ability to process visual input from cameras and images. It is a rapidly evolving field built on complex mathematical algorithms and demanding hardware requirements. While computer vision will eventually drive the eyes and brains of autonomous cars, there are more pedestrian uses available at our fingertips.

The common QR scanning app on mobile phones is a great example of computer vision. Unlike grocery store barcode scanners that use a laser, mobile phone QR scanning is all done with a camera. The QR code’s high-contrast black-and-white pattern means that a wide range of cameras with varying fidelity can read it. The QR code predates the first mobile phones with cameras, but mobile phones allowed its use to explode (and then settle down a bit).

In this three-part series, we are going to look at some common computer vision tools and their potential uses in your mobile apps. For this first post, we will look at face detection.

How Face Detection Works

Face detection with mobile cameras has certainly come into focus in the past several years, with apps like Instagram and Snapchat allowing users to adorn and modify their selfies. In fact, face detection has not only moved from the research center to shipping apps, but has also moved from proprietary software to operating system APIs. Both iOS and Android offer face detection APIs for use by all developers without additional licensing fees.

There is a wide variety of algorithms and techniques for taking a photo (or a frame of video) and detecting a face. To keep this blog article readable and free of math, we’ll just generalize what’s going on. One point of clarification, given recent news: face detection answers the question “Is this a face?” while face recognition answers “Whose face is this?” We are sticking with detection only here.

Since we humans come in so many shades, the first step is to discard color and look only at the brightness of potential faces. Next, let’s look at light and dark patterns. The most prominent is a pair of rows stacked on top of each other: a lighter forehead with darker eyes underneath. Then, looking along that lower row, we see a dark-light-dark pattern of one eye, the nose bridge, then the other eye. This lighter “T” shape of the forehead and nose is an anchor point for many algorithms, and it helps avoid problems with mustaches and beards in the lower half of the face.
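To make the light and dark patterns a little more concrete, here is a minimal Python sketch of the two checks described above. It follows the general idea of Haar-like features computed over a summed-area table, which is one well-known family of techniques; we are not claiming it is what Apple or Google actually ship. All function names and the toy image are our own illustrations.

```python
def to_gray(pixel):
    """Drop color: convert an (r, g, b) pixel to one brightness value."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def integral_image(gray):
    """Build a summed-area table, padded with a leading row and column of zeros."""
    height, width = len(gray), len(gray[0])
    table = [[0.0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0.0
        for x in range(width):
            row_sum += gray[y][x]
            table[y + 1][x + 1] = table[y][x + 1] + row_sum
    return table


def region_sum(table, x, y, w, h):
    """Total brightness inside the w-by-h rectangle whose top-left corner is (x, y)."""
    return table[y + h][x + w] - table[y][x + w] - table[y + h][x] + table[y][x]


def forehead_over_eyes(table, x, y, w, h):
    """Two-row pattern: top half minus bottom half. Large positive = light over dark."""
    half = h // 2
    return region_sum(table, x, y, w, half) - region_sum(table, x, y + half, w, half)


def eye_bridge_eye(table, x, y, w, h):
    """Three-column pattern: twice the middle third minus the two outer thirds."""
    third = w // 3
    left = region_sum(table, x, y, third, h)
    middle = region_sum(table, x + third, y, third, h)
    right = region_sum(table, x + 2 * third, y, third, h)
    return 2 * middle - (left + right)


# Hypothetical 6x4 brightness grid: a bright forehead over dark eyes and a bright bridge.
TOY_FACE = [
    [200, 200, 200, 200, 200, 200],
    [200, 200, 200, 200, 200, 200],
    [50, 50, 200, 200, 50, 50],
    [50, 50, 200, 200, 50, 50],
]
TOY_TABLE = integral_image(TOY_FACE)
```

On the toy grid, `forehead_over_eyes(TOY_TABLE, 0, 0, 6, 4)` and `eye_bridge_eye(TOY_TABLE, 0, 2, 6, 2)` both score strongly positive, while a uniformly gray grid scores zero on both. A real detector slides many such patterns across the image at several scales and combines their scores.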

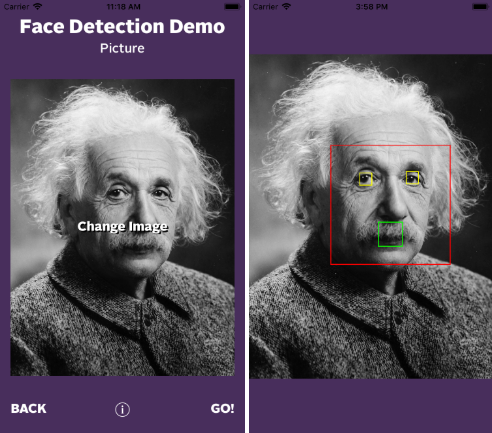

Let’s take a look at what happens when we feed a portrait to the face detector. The following examples are shown using Apple’s face detector in iOS. The face detector supplied by Google in Android works in a similar fashion. We’ll start with a well-known photo of a famous nerd (photo 1) and run it through to see what is returned (photo 2).

So in this demo, we highlight what the face detection gives us. The first thing we see is the large, red rectangle that encompasses the face. Next, we see two yellow rectangles for the eyes and one green one for the mouth.

Taking a closer look at the face rectangle, we see that it seems pretty accurate on the bottom, stopping right at the bottom of his chin. On the left side, the edge cuts off around where most would consider the face to meet the side of the head. The right side is a little different in that it extends beyond his head, but it does leave the eyes and mouth centered in the face rectangle. The top of the face rectangle possibly struggles with Mr. Einstein’s receding hairline but is still pretty close to where we expect the face to end. Lastly, the spots for the eyes look quite accurate, while the mouth is reported only as a center point.

Overall, the face detection is pretty accurate. We get a fairly tight location for the face (i.e., it doesn’t include the whole head), though the rectangle may extend beyond the face if the subject isn’t looking straight at the camera. The eye locations look great, and the mouth seems to be a little less reliable, but that’s to be expected given the various poses we can hold with our mouths. By the way, if that face rectangle reminds you of the highlight you see as you take a photo with your phone, that’s because the camera is likely using the same face detector.

In general, this is what we see from image face detectors. We don’t get an exact map of which pixels belong to the face and which don’t, but instead get a general area. This helps us account for differing physical features as well as different angles of the face in the photo.

Building Face Detection Into Your App

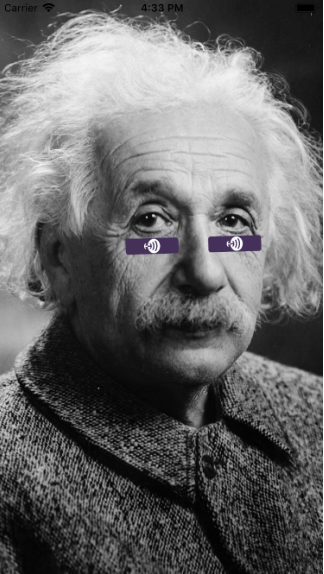

Now let’s take a look at what we can do with this data. Knowing that the eyes are a great anchor point in face detection allows us to play around with some things. We take our team approach at Hanson very seriously, and it’s important for us to show clients that we are ready for their work with a proper game face. Mr. Einstein feels the same way and dons eye black in preparation:

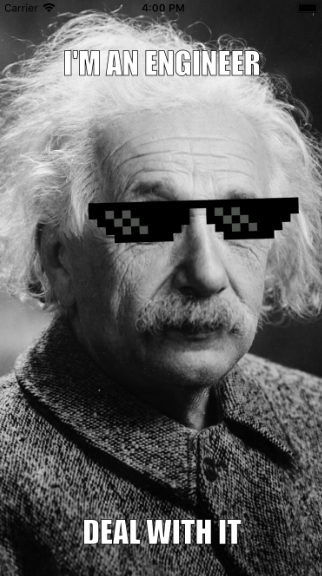

But there is a lighter side to Hanson and we like to embrace all things Internet, including meme culture:

Both of these examples used the data from the face detector to composite what you see. These demos work with both still and video images and can pick out one or more faces.
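As a rough illustration of how the eye positions can drive a composite, here is a small Python sketch that works out where to place, scale, and rotate an overlay image. It is not the code behind our demos; `overlay_placement`, `flip_y`, and the `width_per_eye_distance` ratio are hypothetical names and values chosen for this example.

```python
import math


def flip_y(point, image_height):
    """Convert between bottom-left-origin and top-left-origin coordinates."""
    x, y = point
    return (x, image_height - y)


def overlay_placement(left_eye, right_eye, width_per_eye_distance=2.0):
    """Work out where and how to draw an overlay anchored on the eyes.

    left_eye and right_eye are (x, y) points from a face detector.
    width_per_eye_distance is an assumed styling ratio, not a detector value.
    """
    lx, ly = left_eye
    rx, ry = right_eye
    dx, dy = rx - lx, ry - ly
    eye_distance = math.hypot(dx, dy)
    return {
        # Midpoint between the eyes, roughly the bridge of the nose.
        "center": ((lx + rx) / 2, (ly + ry) / 2),
        # Scale the artwork with the face so it fits near and far subjects.
        "width": eye_distance * width_per_eye_distance,
        # Tilt of the line through both eyes, so the overlay follows a tilted head.
        "angle_degrees": math.degrees(math.atan2(dy, dx)),
    }
```

For level eyes at `(100, 200)` and `(200, 200)`, the overlay is centered at `(150, 200)` with no rotation. Because some detectors report points with the origin at the bottom-left of the image while many drawing APIs start at the top-left, a helper like `flip_y` is often needed before drawing.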

While the above shows some clever uses of face detection that might add fun and whimsy to a branding app, there are some great utility uses for a wider group of apps. If your app allows users to create an avatar, then face detection can streamline that process. For apps where a user can select an existing picture for an avatar, face detection can be used to automatically crop and center the picture on the user’s face. Or, if your app allows a user to take a new picture, face detection could provide visual guides over the camera feed to make sure the user’s face stays centered in the frame for the clearest avatar picture.
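Both avatar ideas come down to simple arithmetic on the face rectangle. The Python sketch below shows one way to do it, assuming rectangles are `(x, y, width, height)` tuples in pixels; the function names, the `padding` default, and the `tolerance` default are our own assumptions rather than values from any platform API.

```python
def avatar_crop(face, image_size, padding=0.5):
    """Return a square crop (x, y, side) centered on the face.

    padding is the extra margin on each side, as a fraction of the face size.
    The crop is shrunk and shifted as needed to stay inside the image.
    """
    fx, fy, fw, fh = face
    img_w, img_h = image_size
    side = max(fw, fh) * (1 + 2 * padding)
    side = min(side, img_w, img_h)  # never larger than the image itself
    center_x, center_y = fx + fw / 2, fy + fh / 2
    x = min(max(center_x - side / 2, 0), img_w - side)
    y = min(max(center_y - side / 2, 0), img_h - side)
    return (x, y, side)


def is_face_centered(face, frame_size, tolerance=0.1):
    """True when the face center is within tolerance (fraction of frame) of the middle."""
    fx, fy, fw, fh = face
    frame_w, frame_h = frame_size
    off_x = abs((fx + fw / 2) - frame_w / 2) / frame_w
    off_y = abs((fy + fh / 2) - frame_h / 2) / frame_h
    return off_x <= tolerance and off_y <= tolerance
```

With a 200-pixel face at `(400, 300)` in a 1000x800 photo, `avatar_crop` returns a 400-pixel square starting at `(300, 200)`. An app could run `is_face_centered` on each camera frame and change the color of an on-screen guide once it returns `True`.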

Download and Try

The demos shown here are published in the iOS App Store for you to play with, found here: <https://apple.co/2sz49zl>. As we continue this series looking at other technologies under the computer vision umbrella, we’ll update the app so you can check out additional demos.

Thank you for joining us on this first stop on the journey through mobile computer vision. Stay tuned for future articles exploring augmented reality, object detection, and more!